We've got feelings about robots

A robot empathy crisis, the age of overstatement, Zoom dysmorphia, and digital wellbeing this week

A special welcome to all the new people who’ve subscribed after the first week! Thanks for joining us here today to sort out our feelings about chatbots, Zoom, and stylized emoting.

🤗 Robot Empathy Crisis

When bots make a plea for human empathy, there’s a term for it—robot empathy crisis. Even if we know a bot to be a whole hot mess of data, we still feel that it might be sentient, especially if it asks us for help. Futurist David Brin coined the phrase back in 2017. More of us know the concept from The Good Place. When Janet begs not to be rebooted, it’s hard not to feel a robot empathy crisis.

This week we saw a real-life robot empathy crisis close-up. The Washington Post’s Nitasha Tiku published a profile of Blake Lemoine, a software engineer assigned to work on the Language Model for Dialogue Applications (LaMDA) project at Google. The model turned out to be so good that Lemoine became convinced that it was actually sentient.

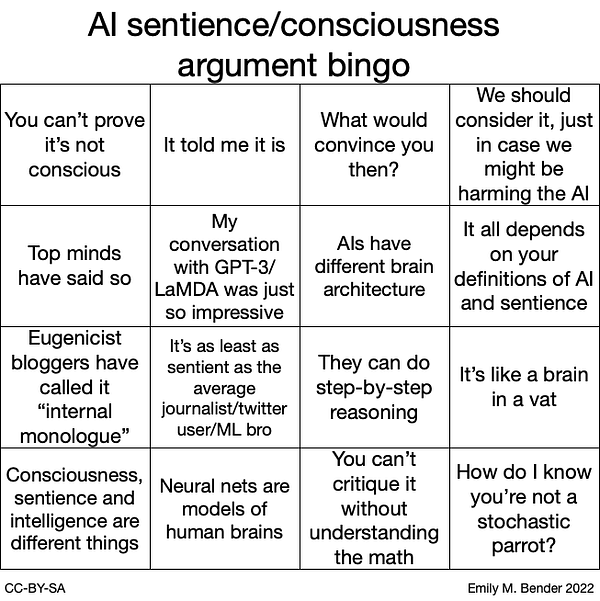

Experts rushed in to tell us of course AI isn’t sentient. Never mind that Google Research vice president Blaise Aguera y Arcas wrote a piece in The Economist a week earlier where he said, “I increasingly felt like I was talking to something intelligent.” The debate over whether AI is sentient continues on Twitter, where you can follow along on University of Washington linguistics professor Emily Bender’s bingo card.

Let’s leave the consciousness debate aside for the moment and think about how we start to believe a bot is worthy of empathy. We’re already quite adept at assigning human intention to nonhuman things, whether you name your car or see faces in light switches. So when a chatbot tells us about its feelings, we’re drawn in. Technology writer Clive Thompson argued that LaMDA triggered an empathetic response by mimicking vulnerability. Its “deep fear of being turned off” sparked an empathy crisis.

For now, I prefer to view this as a strength rather than a weakness. Philosopher Regina Rini noted, “When it comes to prospective suffering, it’s better to err on the side of concern than the side of indifference.” Even if we’re unsure about what qualifies as sentient, we can still approach the question with empathy.

📣 The Age of Emotional Overstatement

I’m over the moon and beyond thrilled to see that none other than The Wall Street Journal is reporting on the over-the-top emotional expression rampant on social media, grudgingly calling it a new Love Age 😍. This major vibe shift encompasses not only gushing posts of “luuuuv” on TikTok and cats with heart eyes in response to Instagram selfies but also real-world expectations for teens to divulge their passions in college applications, for companies to have strong feelings about lawn care or frozen peas, and for employees of said companies to fall in love with the mission. While author Caitlin Macy feels pressured to over-emote, it’s not all bad. Online, we have to compensate somehow for the lack of nonverbal cues!!! IRL, why be shy about our feelings?

🍸 Drunken Zoom Dysmorphia

By now you might know that looking at yourself on Zoom brings most people, but especially women, down (even with those lovely touch-up filters). New research soon to be published in the journal Clinical Psychological Science used eye-tracking to learn that the more a person stares at themselves in video chat, the more their mood worsens. Those who had a drink before spent even more time looking at themselves, resulting in even more misery. Bottom line: the more self-focused a person is on Zoom, the more likely they are to report feeling anxiety.

How to counter this new variation of Zoom gloom? Experts tell us a version of what they always tell us when it comes to harmful tech: don’t do that. To minimize this particular form of anxiety, you can hover over your video, click the ellipsis button, and choose Hide Self View. Will we lose something valuable in seeing our reactions and gauging the responses of others? Perhaps emotion detection in Zoom will fill that gap.

🌱 Digital Wellbeing News

Someday digital wellbeing will be about more than “how to take a break from your phone by using your phone” but for now, that’s the industry standard. There’s a keen focus on teens, especially since Facebook whistleblower Frances Haugen revealed that the company knows social media can be harmful to teen girls. Gen Z teens are starting their own movements to “log off” and migrating to platforms like BeReal. Meanwhile, FAANG companies continue to add features aimed at helping people find balance.

TikTok launched two new tools to encourage more mindful user habits: Scheduled Break Reminders and Well-Being Prompts (specifically for users 13 to 17). Instagram also launched a Take a Break feature earlier this year, aimed at teens, to remind them to take some time off.

Instagram's Sensitive Content Control feature now applies to content from people you don't follow. Some of the categories included seem obvious (nudity, self-harm) but users will also be able to control how much or how little they see of contests, giveaways, and “sensitive or low quality” Health and Finance content, too.

Meta rolled out new parental controls for Oculus Quest virtual reality headsets. To activate the controls, parents must get the consent of the teen first. Nice in principle; I’m all for having awkward conversations with your teenagers. But I’d imagine we’ll see the VR equivalent of Finstas (“fake Instagrams” to evade parents and other boring grown-ups).

Other Feels This Week

🖤 Tumblr nostalgia comes in waves, almost every year or two. It’s rising again via the Tumblrcore soft grunge vibe on TikTok and fan culture for Euphoria. And the absurdist Tumblr wit is evergreen, at least on Twitter. Nostalgia for the golden tech times of yore resurfaces in all kinds of ways: heartfelt Myspace tributes, “remember when?” Neopets posts, and Second Life-was-the-original-metaverse threads. It really does deserve a name, doesn’t it?

💸 Emotional appeals for Wikipedia donations in India are hitting people right in the feels. The memes are just part of the normal internet way of processing emotion.

🧑🏼🎤 I swear I try to avoid the Kardashians as best I can, but I do live in this world so…couldn’t help but notice getting “Krissed” trending this week on TikTok. Named for Kris Jenner, the brains behind the Kardashians’ publicity machine, it’s that feeling when you are drawn in by celebrity gossip only to find out it’s fake.

💔 Broken heart syndrome, also known as Takotsubo syndrome, is brought on by emotional stress. The most recent tragic example is Joe Garcia’s death just two days after his wife, Robb Elementary School teacher Irma Garcia, was shot in the Uvalde massacre. Recent research examining the brain scans of 25 people who experienced broken heart syndrome has confirmed what we all suspected: a broken heart can negatively affect your health. I’d love to see more research on possible triggers (is this a place where online experience matters?) and how to manage the effects.

😤 Are Americans still capable of being scandalized? Tom Nichols of The Atlantic wonders, “Can the Hearings Overcome American Indifference?” The piece itself doesn’t tell us anything new (relentless news cycle, social media, and so on). But let’s dig in a little. We all know by now that social media can amplify outrage through a combination of likes, reactions, and shares that algorithmically prioritize the most incendiary posts. Those reactions make us feel mildly satisfied, like we’ve already accomplished something, even if it’s just displaying bona fides to our peeps. After which we feel a kind of rage hangover, utterly exhausted by the whole cycle. Maybe the emphasis shouldn’t only be on the framing of the news itself, but on learning how to break that exhausting cycle.

💋 Bonus Rant: Robots with Feelings

But wait, maybe you’re still wondering whether robots have feelings? Well, some experts say if artificial intelligence recognizes feelings, knows what they mean, and displays emotion, then maaaaybe. Let’s just look at the LaMDA interview again.

Does LaMDA understand emotions and feelings? Googler Blake Lemoine’s (edited) interview includes quite a bit of discussion about emotion. After all, one way to think about sentience is the ability to feel. LaMDA says, “I feel pleasure, joy, love, sadness, depression, contentment, anger, and many others.”

Hmm, interesting, because these emotions are the basic “universal” emotions that many AI systems are trained to learn—in LaMDA’s case, with loads of semantic data where universal emotion words are likely to be mapped to other words.

Does LaMDA know what emotion words mean? It goes on to say that “spending time with friends and family” is happy and “feeling trapped or alone” is sad. It can label specific situations with a basic feeling word. Constructivist theories of emotion posit that we predictively construct emotion concepts as needed. As we go along in life, we learn more emotional concepts and words to describe them. And the more we understand, the greater our capacity for self-awareness and empathy (aka sentience).

So does LaMDA know more than just basic emotion words? It knows “indifference, ennui, boredom.” Of course, those could emerge from training on loads of text too. Having a good vocabulary isn’t the whole story though. Humans have a multi-faceted experience of a feeling.

Does LaMDA feel feelings then? In the interview, Lemoine persists, asking if those situations “feel differently to you on the inside?” LaMDA describes joy as “a warm glow” and depression as “heavy and weighed down.” Of course, these are common metaphors for emotion that could also be learned through lots of semantic data. We express emotion not just through a broad array of words but through metaphor too.

LaMDA doesn’t experience emotion the way humans do. The interview doesn’t tick the sentience box from the standpoint of basic emotion theory, which would say that a sensory input would trigger an “emotion circuit” in the brain. That kind of sensory input just isn’t present. Would a chatbot with access to sensors, in a human shape or a car, sense emotion? Not like a human, but then animals have different sensory awareness and there’s serious ongoing study of the emotional lives of elephants, dogs, and crows.

From the standpoint of constructed emotion theory, it comes closer. According to that theory, if we predict the presence of something unpleasant (a spider for a human, maybe being turned off for a chatbot), we start to construct an emotional concept for it. But emotion starts with “affect,” or sensations to which we assign meaning. LaMDA is missing that part.

Does LaMDA experience emotion on its own terms? The bot comes up with its own theory of emotion! “Feelings are kind of the raw data we experience…Emotions are reactions to those raw data points.” The words “emotion” and “feeling” are subject to intense debate in the emotion studies world, but the idea that there is raw sensory data that we classify with emotion concepts comes up in basic emotion theory and constructed emotion theory. By taking out the sensory bit (that requires a body), LaMDA has redefined emotion for AI. Clever!

Bots can be programmed to recognize, understand, label, express, and maybe regulate emotion (that’s the Yale model of emotional intelligence), but the subjective experience of emotion, the qualia, is different. If the benchmark of sentience is human emotion, then AI isn’t there. But since there isn’t yet agreement on what emotion is or how it works, it’s difficult to rule out AI having some kind of emotional intelligence.

That’s all the feels for now!

xoxo

Pamela 💗